Sentio AI Program

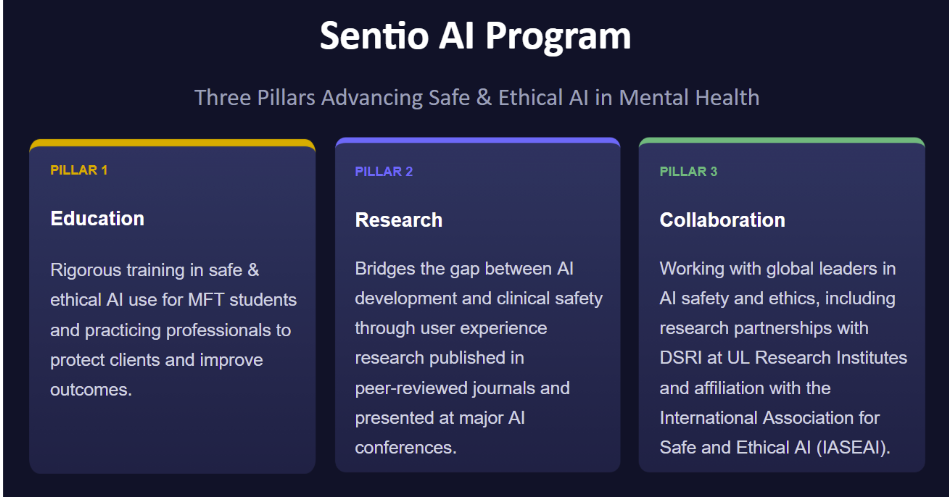

Sentio University advances the scientific discourse on safe AI, built upon a foundation of peer-reviewed research and partnerships with world-leading AI safety organizations.

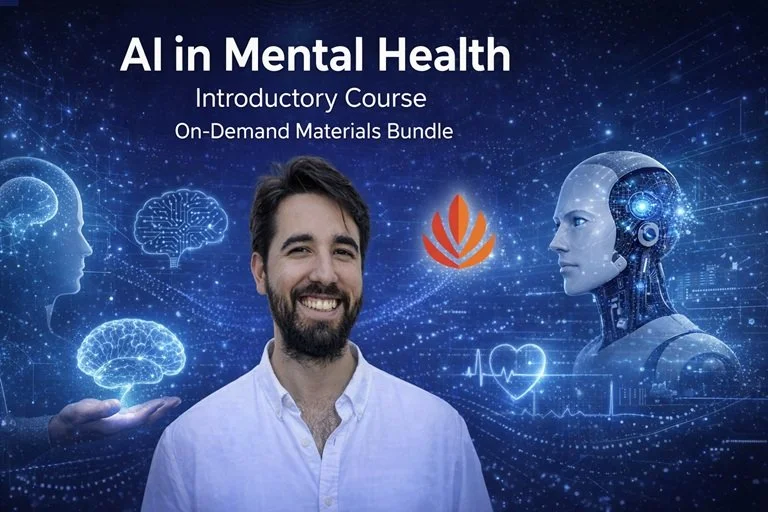

Setting Educational Standards: We are the first to develop a hands-on AI Certification Course that trains clinicians to use major general-purpose AI programs safely and ethically as tools for clinical reasoning, without sacrificing human empathy.

Leading with Evidence-Based Research: Our research includes a national survey identifying AI as a major mental health provider, a clinical safety evaluation comparing how leading large language models (LLMs) handle crisis scenarios, and one of the largest qualitative studies of how users actually experience AI for mental health support.

Collaborating with Global AI Safety Leaders: Through our collaboration with the Digital Safety Research Institute (DSRI) at UL Research Institutes and as an affiliate of the International Association for Safe and Ethical AI (IASEAI), Sentio is working to ensure that the specific needs of the mental health field are represented in global AI governance discussions.

See the Sentio Statement on AI, and sign up for our email list to be alerted to new events and research.

See free recorded webinars on AI in Mental Health on our YouTube channel.

Sentio Mental Health education training webinar on Safe and Ethical use of AI for Therapists

Sentio webinar on AI Mental Health Education Training and Research